Price Points by Omnia Retail

24.02.2026

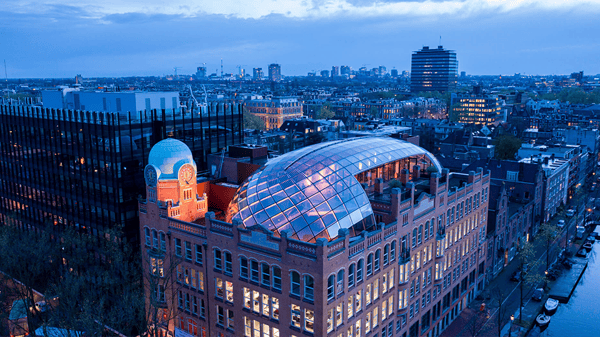

AI Dynamic Pricing: The Future of AI-Driven Retail and DTC Pricing


Retail pricing has changed. Markets move in hours, not weeks. Competitors update faster, marketplaces amplify transparency, and pricing teams are expected to protect margin while staying competitive across thousands (or millions) of SKUs. That’s where AI Dynamic Pricing Software and AI Pricing Software come in: not as a buzzword, but as the operational layer that turns market signals into controlled pricing decisions at scale.

This article combines the most useful parts of three core topics (dynamic pricing foundations, how AI changes pricing execution, and what "agentic" pricing means in practice) into one updated, non-generic guide. You’ll learn what AI dynamic pricing actually is, why it matters, how it works, and how pricing teams implement it without losing transparency or control.

What Is AI Dynamic Pricing in Retail?

AI Dynamic Pricing is pricing that updates continuously because software interprets market signals and executes pricing logic in near real time. It is not simply about changing prices frequently. It is about translating complex inputs (competitor movements, marketplace dynamics, demand shifts, inventory pressure, and category behavior) into structured decisions that teams can govern, test, and scale.

In practice, AI Dynamic Pricing Software monitors the market across channels such as webshops, Google Shopping, marketplaces, and competitor sites. It detects what has changed and applies your strategy rules or optimization objectives to decide where prices should move, how far they should move, and when changes should happen. This matters because manual pricing cycles cannot keep pace with modern retail dynamics.

Dynamic pricing describes the outcome: prices change. AI dynamic pricing describes the mechanism: software interprets signals and executes decisions at scale. When implemented well, it reduces spreadsheet dependency and shifts pricing teams toward strategic steering: defining margin guardrails, price position targets, brand constraints, and category priorities.

AI Dynamic Pricing vs Personalized Pricing

Personalized pricing adjusts prices at the individual level based on user behavior or purchase history. While technically powerful, it can raise trust concerns and regulatory risk when customers discover price differences for identical products.

AI Dynamic Pricing responds to market context instead of personal identity. It reacts to competition, demand, inventory levels, product lifecycle stage, and channel dynamics. This approach helps retailers and brands remain competitive and protect margins while keeping pricing consistent, explainable, and aligned with brand strategy.

Why AI Dynamic Pricing Matters in E-commerce

E-commerce fundamentally changed pricing behavior. Comparison shopping and marketplaces made prices transparent, while the speed of price changes increased dramatically. The challenge today is not whether prices can be updated, but whether the right prices can be updated quickly, safely, and consistently across channels.

Consumer electronics illustrates this clearly. Short product life cycles, frequent competitor changes, and high price sensitivity demand systems that continuously recalculate prices without sacrificing margin control or brand positioning. As online share grows, the same dynamics appear in other categories.

- Price transparency increased: shoppers compare prices instantly, making even small differences commercially relevant.
- Price changes became constant: retailers now set market-driven prices multiple times per day using live signals.

What AI Pricing Software Enables

Modern AI Pricing Software reshapes daily pricing operations. It is not only about being cheaper than competitors, but about managing price position and margin coherently across categories, regions, and channels.

Protect margin while remaining competitive

AI dynamic pricing allows teams to defend competitiveness where it matters most, while preserving margin where demand is less elastic. Rules and guardrails prevent destructive discounting.

Respond to competitor changes without manual effort

Instead of monitoring competitors SKU by SKU, AI Dynamic Pricing Software tracks the market continuously and executes strategy automatically across large assortments.

Use pricing to improve inventory outcomes

AI-driven pricing supports inventory health by adjusting prices based on stock depth, sell-through speed, and lifecycle stage, without undermining brand integrity.

Base decisions on market reality

By connecting competitor data, demand signals, performance metrics, and inventory positions, AI Pricing Software replaces intuition with evidence and makes pricing decisions easier to defend internally.

How AI Dynamic Pricing Software Works

Traditional dynamic pricing relies on manual promotions and seasonal markdowns. AI Dynamic Pricing Software integrates market data, internal constraints, and business logic into a system that executes pricing decisions continuously across online and offline channels.

For teams evaluating vendors, structured comparison is essential: How to buy pricing software for retailers.

AI Dynamic Pricing also extends into physical stores through electronic shelf labels (ESLs), enabling synchronized pricing across channels with minimal operational friction.

From AI Dynamic Pricing to Agentic Pricing

Many AI pricing tools excel at execution but still require significant manual analysis. Dashboards show what happened, but pricing managers must interpret signals and connect them to strategy.

Agentic Pricing addresses this gap by adding a reasoning layer on top of AI Dynamic Pricing Software. With it, teams can:

- Ask pricing questions in natural language
- Receive contextual explanations and recommendations
- Maintain full transparency and control

Practical Agentic Pricing Use Cases

Monitor match rate evolution instantly

Match rate indicates how much of your assortment is directly comparable to competitors. When it drops, competitive visibility is at risk. Agentic AI allows teams to request trend analysis instantly instead of building reports manually.

Identify structural overpricing

Structural overpricing quietly reduces competitiveness. Agentic Pricing surfaces products priced significantly above market benchmarks and explains whether this is driven by category behavior, competitor shifts, or pricing rules.

Conclusion

Dynamic pricing is becoming the default. The real differentiator is whether AI Pricing Software helps teams understand market behavior and act with confidence. Systems that combine execution with explanation will define the next generation of pricing.

The future of pricing is not just dynamic. It is agentic.

Interested in seeing how AI Dynamic Pricing works in practice? Book a demo.

Frequently Asked Questions about AI Dynamic Pricing

Read the most relevant questions about AI Dynamic Pricing. Got more questions? Get in touch.

What is AI Dynamic Pricing Software?

AI Dynamic Pricing Software is software that continuously and automatically adjusts prices based on real-time market data such as competitor prices, demand signals, inventory levels, and product performance. Instead of manual price updates, it uses AI models and pricing rules to optimize prices at scale while keeping decisions transparent and controllable.

Is AI Dynamic Pricing the same as personalized pricing?

No. AI Dynamic Pricing adjusts prices based on market conditions, not individual customer data. Personalized pricing changes prices per user and can raise privacy and trust concerns. AI Dynamic Pricing focuses on competition, demand, inventory, and channel dynamics, making it scalable, transparent, and suitable for retail and DTC environments.

What is the difference between AI Pricing Software and traditional dynamic pricing?

Traditional dynamic pricing focuses mainly on changing prices frequently, often using fixed rules or manual input. AI Pricing Software goes further by analyzing large volumes of data, identifying patterns, and executing pricing decisions automatically. Modern AI pricing solutions can also explain why prices change and what impact those changes have on margins and competitiveness.

Which businesses benefit most from AI Dynamic Pricing Software?

AI Dynamic Pricing Software is especially valuable for retailers and brands that have:

- large or fast-changing assortments,
- high price sensitivity and strong competition,
- multiple sales channels (webshop, marketplaces, physical stores), or
- a need to actively manage margins and price positioning.

Both enterprise retailers and growing DTC brands benefit from AI-driven pricing execution.

How does AI Pricing Software help protect margins?

AI Pricing Software protects margins by considering more than just the lowest competitor price. It incorporates demand elasticity, inventory pressure, lifecycle stage, and strategic pricing rules. This allows businesses to stay competitive where it matters while avoiding unnecessary discounts and preserving profitability.

What is Agentic Pricing and how does it build on AI Dynamic Pricing?

Agentic Pricing is the next evolution of AI Pricing Software. In addition to executing pricing strategies, agentic AI analyzes market behavior, explains what is happening, and recommends next actions. Instead of only showing dashboards, it answers pricing questions in natural language, helping pricing teams make faster, more confident decisions with full transparency.
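To make the idea of strategy rules combined with margin guardrails more concrete, here is a minimal sketch in Python. It is not Omnia's actual logic: the `Product` fields, the `reprice` function, the 2% undercut rule, and the 10% minimum margin are all hypothetical values chosen for illustration. The function returns both a price and a short reason, in the spirit of keeping each decision explainable.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Product:
    # All fields are illustrative assumptions, not a real vendor schema.
    sku: str
    cost: float                                   # unit cost
    current_price: float
    competitor_prices: list[float] = field(default_factory=list)
    min_margin: float = 0.10                      # guardrail: minimum margin as a share of price
    max_price: Optional[float] = None             # guardrail: optional price ceiling


def reprice(p: Product, undercut: float = 0.02) -> tuple[float, str]:
    """Return (new_price, reason) for one product.

    Hypothetical strategy rule: sit `undercut` below the cheapest matched
    competitor, but never break the margin floor or the price ceiling.
    """
    if not p.competitor_prices:
        return p.current_price, "no competitor data: price unchanged"

    target = min(p.competitor_prices) * (1 - undercut)
    floor = p.cost / (1 - p.min_margin)           # lowest price that still keeps min_margin

    if target < floor:
        return round(floor, 2), "margin floor applied"
    if p.max_price is not None and target > p.max_price:
        return round(p.max_price, 2), "price ceiling applied"
    return round(target, 2), "positioned below cheapest competitor"


if __name__ == "__main__":
    laptop = Product("LAP-1", cost=80.0, current_price=120.0,
                     competitor_prices=[100.0, 110.0])
    print(reprice(laptop))
```

In a sketch like this, the pricing team owns the guardrail values while the rule runs automatically, which mirrors the split between strategy and execution described in the article.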


10.02.2026

Agentic Pricing Explained: The Next Generation of AI Pricing Software


From Automation to Intelligence: The Real Meaning of Agentic Pricing

In our previous article on Agentic Pricing for Retail, we introduced Agentic Pricing as the next evolution of AI pricing software. We explained how adding a natural language intelligence layer to structured pricing logic changes how teams interact with data. But to truly understand the significance of Agentic Pricing, we need to go beyond features and interface improvements.

Agentic Pricing is not simply a new module or an AI add-on. It represents a structural shift in how pricing systems operate and how pricing teams make decisions.

What Agentic Pricing Actually Means

Traditional AI pricing tools focus primarily on execution. They monitor competitor prices, apply rule-based logic or optimisation algorithms, and update prices automatically. This has transformed retail and DTC pricing over the past decade.

However, execution alone does not equal intelligence. Pricing managers still spend significant time navigating dashboards, filtering data, exporting reports, validating assumptions, and interpreting results. Even the most advanced AI dynamic pricing software requires human interpretation to connect signals to strategy.

Agentic Pricing changes this dynamic. An agentic system does not just execute rules. It understands intent. It retrieves relevant structured data. It performs contextual analysis. And crucially, it explains the outcome in clear language. In other words, agentic pricing software introduces reasoning capability into pricing environments.

This has three major implications:

- Insight velocity increases dramatically. Questions that previously required multiple dashboard steps now require one sentence.
- Cognitive load decreases. Pricing managers spend less time searching and more time deciding.
- Strategic clarity improves. The system does not just return numbers: it connects patterns across data dimensions.

For retailers operating thousands of SKUs and dynamic competitive landscapes, this changes how quickly they can respond to market shifts. For brands working in DTC environments, it strengthens the link between price positioning, margin, and brand strategy.

Agentic Pricing transforms AI pricing software from a rule executor into an analytical collaborator.

Why This Shift Matters for Retailers and DTC Brands

Retail pricing complexity has increased exponentially. Assortments grow. Competitors adjust daily. Marketplaces intensify price transparency. Meanwhile, internal stakeholders expect faster, more data-backed decisions.

AI pricing for retail has already improved execution speed. But as complexity rises, the bottleneck shifts from execution to understanding.

The same applies to AI pricing for DTC brands. Direct-to-consumer players must balance competitiveness, contribution margin, and brand perception. Pricing decisions cannot be purely reactive. They require contextual awareness.

Agentic Pricing addresses this new bottleneck. It closes the gap between data and decision-making. Instead of asking “Where do I find this insight?”, pricing managers ask “What is happening?” and receive structured, contextualised answers.

This is exactly where the Omnia Agent turns vision into daily impact. While the Omnia platform continues to deliver high-quality competitor pricing insights and execute your pricing strategy, the Agent removes the manual work around analysis. Instead of digging through dashboards and exporting reports, you simply ask your pricing question in natural language. The Agent accesses your data, runs the analysis, and returns clear, contextual answers, often supported by visualisations.

The following examples show how this works in practice today.

Use Case 1: Monitoring Match Rate Evolution Without Manual Analysis

Match rate is one of the most critical indicators in competitive pricing. It measures how much of your assortment is directly comparable to competitor products. A decline in match rate may signal data issues, competitor assortment shifts, or blind spots in competitive coverage.

Traditionally, analysing match rate evolution requires navigating reporting modules, adjusting date ranges, generating visualisations, and interpreting trends manually.

With the Omnia Agent, the workflow changes entirely. A pricing manager can simply ask:

“Get me a graph with the match rate evolution of the last 4 weeks.”

The Agent retrieves historical match rate data, generates a visual representation, and provides context around fluctuations. It can identify whether changes are isolated to specific categories or structural across the assortment.

This is where agentic pricing for retail demonstrates its value. Instead of manually validating data health, teams receive immediate insight into competitive visibility. If match rate declines in a high-revenue category, corrective action can begin instantly.

The time saved is significant. More importantly, the quality of awareness improves. The Agent does not merely show a graph; it explains what the movement implies.

Use Case 2: Identifying Structural Overpricing Versus Market Average

One of the most common revenue risks in retail and DTC environments is structural overpricing. Not minor competitive gaps, but systematic price positioning above the market average that reduces conversion and competitiveness.

Detecting this manually requires filtering product groups, comparing indexed prices, and analysing competitor spreads.

With Agentic Pricing, the interaction becomes strategic rather than operational. A category or pricing manager can ask:

“For which products am I significantly overpriced compared to the market average?”

The Omnia Agent evaluates price indices across matched products, applies predefined deviation logic, and surfaces items that structurally exceed competitive benchmarks. It connects this to category context and relative price positioning.

For retailers, this reduces the risk of silent revenue leakage. For brands using AI pricing for DTC strategies, it prevents gradual competitiveness erosion that can harm performance marketing efficiency.

Crucially, the Agent does not stop at detection. It provides explanation. Is overpricing driven by a specific competitor? Is it concentrated in one category? Is it linked to a recent strategy update?

This is the defining characteristic of agentic pricing software. It connects execution data with contextual reasoning.

The Strategic Implication

Agentic Pricing represents more than convenience. It reshapes how pricing teams operate. It reduces operational friction and increases strategic focus.

Retailers gain faster awareness of market dynamics. DTC brands gain clearer control over margin and positioning. Pricing managers gain time: not by automating decisions blindly, but by accelerating understanding.

The future of AI pricing software is not defined by automation alone. It is defined by systems that understand, reason, and explain.

The future of pricing is not just dynamic. It is Agentic.
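The two metrics behind the use cases above, match rate and a price index against the market average, can be illustrated with a small Python sketch. This is an assumption-laden illustration rather than how the Omnia Agent computes them: the function names, the input shapes, and the 1.10 deviation threshold are hypothetical, and "market average" is taken here as the simple mean of matched competitor prices.

```python
from statistics import mean


def match_rate(assortment: list[str], matched: set[str]) -> float:
    """Share of the assortment that has at least one matched competitor product."""
    if not assortment:
        return 0.0
    return sum(1 for sku in assortment if sku in matched) / len(assortment)


def overpriced(own: dict[str, float],
               market: dict[str, list[float]],
               threshold: float = 1.10) -> dict[str, float]:
    """Return {sku: price_index} for SKUs priced above the market benchmark.

    price_index = own price / mean of matched competitor prices.
    The 1.10 threshold (10% above market average) is an assumed deviation rule.
    """
    flagged: dict[str, float] = {}
    for sku, price in own.items():
        competitor_prices = market.get(sku)
        if not competitor_prices:
            continue  # unmatched SKU: no benchmark to compare against
        index = price / mean(competitor_prices)
        if index > threshold:
            flagged[sku] = round(index, 3)
    return flagged


if __name__ == "__main__":
    own_prices = {"A": 120.0, "B": 50.0, "C": 30.0}
    competitor_prices = {"A": [100.0, 100.0], "B": [48.0, 52.0]}

    print(match_rate(list(own_prices), set(competitor_prices)))
    print(overpriced(own_prices, competitor_prices))
```

Note that unmatched SKUs are skipped in the overpricing check, which is one reason a falling match rate matters: products without a benchmark cannot be flagged at all.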


29.01.2026

Retail Trends for 2026: A Look Into the Retail Crystal Ball


The retail industry in 2026 looks fundamentally different from even just two years ago. Pricing used to be a quarterly exercise: spreadsheet analysis, competitive positioning, maybe some seasonal adjustments. Now it's a real-time discipline, powered by predictive intelligence and shaped by consumer expectations that shift faster every year.

The retailers and DTC brands winning right now treat pricing as a strategic capability rather than a reactive tactic. Deloitte's 2026 Retail Industry Outlook found that nearly all retail executives expect higher costs in 2026, yet most anticipate margin increases anyway. The math seems impossible until you look at how they're achieving it: precision pricing strategies that balance profitability with customer trust.

Let's look into the retail crystal ball and see what the new year has in store for e-commerce.

What the 2025 Holiday Season Tells Us About 2026

The 2025 holiday season was a preview of where retail is heading. Black Friday 2025 saw $79 billion in global online sales, up about 6% year over year. Shopify merchants alone generated $14.6 billion over the four-day period, a 27% increase from 2024.

But the headline numbers don't tell the full story. What's interesting is how people shopped. In-store traffic in the US declined by 3.6% compared to 2024, but consumers aren't abandoning physical retail; they're just approaching it differently.

"The era of the impulse holiday spree is ending," RetailNext's global manager of advanced analytics told Forbes. "Consumers are in control, and they're treating Black Friday as one data point in a much longer hunt for value."

Salesforce found that online discount rates remained flat year-over-year, with average discounts peaking at just 28% in the US and 27% globally. Retailers are becoming more strategic about where and when they discount.

Coach is a telling example. The brand has "deliberately moved away from deep discounting over the past several years." It's a bet on brand equity over short-term volume.

In Europe, the holiday season showed a different pattern. In Western Europe, Black Friday saw Computers and Gaming outperform Consumer Electronics, driven by replacement cycles as consumers upgraded pandemic-era devices and responded to the end of Windows 10 support.

"Is This Worth My Money?" The Value-Seeking Consumer

If the past few years taught retailers anything, it's that consumers have permanently recalibrated their understanding of value. What started as inflation-driven belt-tightening has evolved into something more structural.

81% of European shoppers say inflation is changing how they buy. 31% have switched to more affordable brands, and 21% wait for sales or discounts before shopping at all. Nearly seven in 10 retail executives agree that value-seeking behaviours represent a structural change within the industry.

But value doesn't just mean "cheap." As much as 40% of consumer perceptions of a brand's value stems from factors other than price: quality, customer service, checkout experience, and loyalty programmes all factor into whether a customer perceives your pricing as fair.

Why Almost Every Brand Is Moving Upmarket

Here's a trend that might seem counterintuitive during cost-conscious times: brands across every segment are raising prices and moving upmarket.

The BoF-McKinsey State of Fashion 2026 report documents this shift in detail. Value brands like Bershka and H&M have reduced the share of SKUs in their lowest price tiers in the UK by 15 to 25% between 2023 and 2025. Mid-market players are tapping into growing demand for "affordable aspiration." And premium brands are seizing white space created by luxury price increases, which rose 61% on average between 2019 and 2025.

What's driving this?

Two forces are pushing brands toward premium positioning

- From below: Ultra-low-cost rivals like Shein and Temu have made competing on price nearly impossible. When Shein faced tariff increases that pushed certain item prices up 377%, Inditex responded not by matching prices, but by reviving its budget brand Lefties as a direct competitor with the advantage of physical stores.
- From above: Luxury brands have raised prices so aggressively that aspirational consumers are opting to spend their disposable income elsewhere.

On Running is a masterclass in premium positioning. The Swiss footwear brand reported record Q3 2025 results: 794 million Swiss francs in revenue, up 25% year over year. While competitors rely on discounting, On maintains a $180 average selling price compared to Hoka's $160, earning 65.7% gross margins versus the industry average of 45-50%.

"We want to separate ourselves even more from our competitors, so we are in the position to increase prices and we will do this," co-CEO Caspar Coppetti said on an earnings call. That confidence comes from deliberate brand building over years.

Even luxury houses are expanding into new categories: Loro Piana launched a dedicated ski capsule collection for Fall/Winter 2025-26, featuring technical innovations like Techno Bi-Stretch 3L Storm fabric made from yarn derived from coffee waste. It's luxury performance wear, priced accordingly, for a market segment that didn't exist a decade ago.

Can't Spell Retail Without AI: Agentic AI Changes Everything

In 2026, the conversation around AI in retail has shifted. It's no longer about whether to adopt AI-powered pricing. It's about understanding what separates the tools that deliver results from those that don't.

Deloitte's 2026 Retail Outlook found that 68% of retail executives expect to deploy agentic AI for key operational and enterprise activities within 12 to 24 months. But what actually makes agentic AI different from the AI tools retailers have experimented with for years?

From assistive to autonomous

Traditional AI in retail has been assistive: chatbots that answer questions, recommendation engines that suggest products, and analytics dashboards that surface insights for humans to act on. These tools wait for instructions. They respond to prompts. They require someone to interpret results and decide what to do next.

Agentic AI operates differently. These systems combine three capabilities that earlier AI lacked: memory (retaining context across interactions), reasoning (evaluating options against goals), and tool use (taking actions in external systems). Instead of surfacing an insight and waiting, an agentic system can detect a problem, evaluate possible responses, execute a solution, and learn from the outcome.

The difference matters. McKinsey research suggests that merchants using agentic AI could reclaim up to 40% of their time currently spent on reporting and execution. That's not because the AI generates better reports. It's because the AI handles the entire loop: monitoring data, identifying what needs attention, and acting on it within predefined guardrails.

Consumer-facing agents are already here

On the consumer side, agentic commerce is moving fast. Salesforce reported that $14.2 billion in global online sales on Black Friday were driven by AI agents. Shoppers are using ChatGPT, Claude, Perplexity, and other tools to research products, compare prices, find discounts, and get gift recommendations.

The major platforms are racing to own this layer. In 2025, OpenAI partnered with Walmart, Target, Instacart, and DoorDash to let shoppers complete purchases within ChatGPT. Amazon released "Buy For Me," an agentic tool that lets consumers shop other retailers without leaving Amazon's app. Google rolled out agentic checkout options. Perplexity partnered with PayPal just before Black Friday.

What makes these agents different from a search engine? They don't just return results. They evaluate options against your criteria, remember your preferences, and can complete transactions on your behalf. As one retail analyst put it: "AI bots aren't looking at display ads. They're looking at the inherent quality and metadata of the product, including its price."

What agentic AI means for pricing teams

The same principles that make consumer agents powerful apply to the operational side of retail. Instead of pricing analysts pulling reports, spotting anomalies, building recommendations, and waiting for approval cycles, agentic systems can compress that entire workflow.

A few examples of what this looks like in practice:

- Continuous monitoring without dashboards. Rather than checking competitor prices on a schedule, agentic systems watch for meaningful changes and surface only what requires attention. A competitor undercutting you on a key SKU, a pricing anomaly across channels, an opportunity in a category where you have margin room: the system flags these proactively instead of burying them in a weekly report.
- Execution within guardrails. The most useful agentic pricing systems don't require human approval for every change. They operate within defined parameters (floor prices, ceiling prices, margin thresholds, competitive positioning rules) and adjust automatically when conditions warrant. Humans set strategy; the system handles execution.
- Explainability built in. Unlike black-box algorithms, well-designed agentic systems can show exactly why a price changed: which rule triggered, what data informed the decision, and what the expected impact is. This matters for internal alignment (pricing, category, and finance teams seeing the same logic) and increasingly for regulatory compliance.

Omnia Agent is built on these principles. It monitors pricing data continuously, surfaces insights through a conversational interface, and operates within transparent guardrails that pricing teams define. When a price changes, the reasoning is visible: what triggered it, which rules applied, and what outcome is expected. It's not a bolt-on AI feature. It's how pricing software s

The Profitability vs. Competitiveness Tightrope

The tension between maximising profitability and remaining competitive has never been sharper. Amazon prices rose 5.7% through September 2025, while Target and Walmart prices increased just 1.7% each. The disparity stems largely from Amazon's reliance on third-party sellers, who face sharper impacts from tariffs and have fewer tools to absorb costs.

Target has been particularly vocal about its strategy. The company held prices steady on back-to-school items like crayons, notebooks, and folders from 2024 to 2025, positioning itself as a value leader while selectively raising prices elsewhere. Walmart took a similar approach, noting it has permanently lowered prices on 2,000 items since February.

In Europe, Carrefour announced a €1.2 billion savings plan to fund price cuts and preserve competitiveness, demonstrating how major grocers are prioritising strategic price investments even as margins compress.

Major retailers are employing sophisticated portfolio approaches to pricing. Deloitte's research shows that 73% of retailers plan to gradually adjust retail prices upward in 2026, while 72% intend to shift their product mix toward higher-margin or value-added items. These tactics work together with dynamic pricing capabilities to protect profitability without alienating customers through sudden, dramatic price increases.

Precision matters more than ever. Blanket pricing strategies that treat all products the same are not meeting market needs effectively. Some items can command higher margins because of brand equity, unique features, or timing. Others require aggressive positioning to drive volume and market share.

What This Means for Your Pricing Strategy

Looking at the landscape of 2026, several strategic imperatives emerge for pricing teams.

- Invest in predictive capabilities. The gap between retailers with sophisticated pricing intelligence and those relying on manual processes is widening. Predictive analytics, elasticity modelling, and AI-powered forecasting are no longer nice-to-have features.
- Think holistically about value. Price is one component of how customers perceive value. Experience, service, product quality, and convenience all factor into the equation.
- Automate at scale. Manual pricing processes can't keep pace with market dynamics in 2026. Automation frees teams to focus on strategy while ensuring prices remain competitive and optimised in real time. Forecasts suggest more than 70% of European retailers may operate with real-time automated pricing by the end of 2026.
- Prepare for agentic commerce. When AI agents are influencing purchasing decisions, your product data, pricing logic, and transparency become critical competitive advantages.
- Build ethical frameworks. As AI capabilities expand, so does regulatory scrutiny. New York now requires businesses to disclose when they use personal data to deliver individualised pricing. European regulators are expanding rules to cover dynamic marketplace pricing. Retailers who build transparent, ethical pricing practices now will avoid compliance issues later.
- Test and iterate constantly. The market moves too fast for annual pricing reviews. Modern pricing strategies require continuous testing, monitoring, and adjustment.

The retailers thriving in 2026 aren't necessarily those with the biggest budgets or the most SKUs. They're the ones who have embraced pricing as a dynamic, strategic discipline powered by technology and guided by customer insight. As costs rise and competition intensifies, precision in pricing becomes the difference between profitable growth and margin erosion.


22.01.2026

The Future of Pricing Is Agentic. And Today, It Begins


Bringing Some Breakthrough News

It has been tough to keep quiet over the past months as the Omnia team has been excited about the technology breakthrough we have achieved. Today, we are ready to formally launch and announce it. In the past months, we have embraced the rapid advances in AI technology and added a natural language interface to our software: the Omnia Agent. The strict logic of the Omnia platform and its large database of historical pricing data, combined with the latest AI technology, unlock this new, easy-to-use, and powerful Agentic Pricing Platform.

Introducing the Omnia Agent

The Omnia platform will continue to do what it is best at: give you high-quality competitor pricing insights, and execute your pricing strategy. The added Omnia Agent will become your best-ever and fastest-ever pricing and assortment advisor, working seamlessly alongside you to reach your commercial objectives.

Every pricing team knows the feeling. Spending hours every week answering the same questions, digging through dashboards, exporting data, and double-checking assumptions. Knowing the answer is in the data somewhere, but not knowing where to start. Or simply not having the time to look at everything you should be looking at. That is why we built the Omnia Agent.

The beta version, live today, lets you ask pricing and market questions in natural language, just as you would ask a pricing analyst. The Agent combines deep pricing knowledge with direct access to your data and market data to run the analysis for you and return clear, plain-language answers. Where helpful, those answers are supported by tailored visualisations directly in the chat. This is not about replacing dashboards with a chat box. It is about removing the manual work, the guesswork, and the time pressure, so you can get to solid pricing insights in seconds rather than hours.
Here are a few examples of questions that could be answered by the Omnia Agent:

- Which competitors show a significant change in price positioning over the past month compared to before?
- Which of my categories or brands are seeing the biggest shifts in competitiveness?
- Give me an analysis of competitor behavior change for category laptops in the past 3 months
- What categories could I increase my margin on?

We believe that market insights delivered this way will be easier to grasp for most users than using dashboards. Also, while we believe dashboards will always have a role for key insights that our users want to frequently evaluate, market insights via the Omnia Agent provide unlimited flexibility: as long as the data points for your question live somewhere in the market insights database, the Omnia Agent will answer the question you have.

Best of Both Worlds

Pricing technology historically either followed a rule-based system or an algorithmic optimization approach. Rule-based systems require substantial management effort in setup and refinement. They then provide pricing managers with a clear understanding of “what’s running” and strong flexibility and control over pricing execution. Algorithmic optimization systems offer simplicity and are more hands-off as they largely run and optimize towards the objectives you set. Yet the resulting black-box outcomes and lack of control can produce undesired results or discomfort, and actual performance is often worse than that of rule-based systems.

The Omnia platform with the new Omnia Agent is a classic best-of-both-worlds solution: offering the business logic and flexibility of rule-based systems, with the intelligence and proactivity of algorithmic optimisation. As a user, you will gain higher-quality insights and realise substantial time savings while keeping full transparency on each recommendation, meaning you stay in control of your pricing.
Shaping the Future of Pricing

As we take this first step into the agentic era, our mission remains unchanged: We give retailers, brands, and their teams Superpowers by unleashing the full potential of pricing through market data, insights, and automation. But the way we achieve that mission will evolve faster than ever before. Agentic technology opens a path toward pricing systems that are not just tools but teammates. Systems that understand your goals, proactively help you reach them, and explain every decision along the way.

The basis of pricing is having the best data combined with the intelligence to spot patterns in the data. However, the true differentiator is translating intelligence into real-world impact: clearly, transparently, and in a way that elevates every member of the commercial team. With the Omnia Agent, we are building that future deliberately, step by step, in close collaboration with our customers.

This launch is more than a product milestone. It is a re-commitment to the values that have guided Omnia from the start: transparency, flexibility, and a relentless focus on enabling better decisions. Agentic pricing amplifies all of these. It brings us closer to a world where pricing becomes not just faster or smarter, but fundamentally more strategic. Where teams can spend less time searching and configuring, and more time steering the business.

We Invite You to Jump Right In

For all our loyal customers, I hope you will dive straight in and try out the Omnia Agent today. You will be surprised by the ease of use and the speed and power of the insights. We look forward to hearing from you! If you are not yet an Omnia customer, today is a great day to join. Do reach out for a demo; we are convinced you will soon share our excitement!

The future of pricing is agentic, and today, it begins.

Sander Roose
Founder & CEO, Omnia Retail

Frequently Asked Questions

How can I participate in the beta program?
Are you an Omnia customer? Reach out to your personal Customer Success Manager or csm@omniaretail.com. Not yet a customer but interested in the Omnia software, including our Agent? Contact us to get a free demo.

How is the Agent different from a regular dashboard?
Dashboards show you data you are frequently referring to. Omnia Agent interprets it. Instead of digging through filters and charts, you ask a question and get an answer, with full context on what's driving it.

Will the Agent be limited to insights from the market data?
No, insights from the market data are just a first step. Our team is committed to giving the Agent more and more capabilities. Soon the Agent will also come with proactive, data-driven recommendations to reach your KPIs. In the longer term the Agent will be able to autonomously optimize your pricing within the guardrails you set.

As you are using LLMs (Large Language Models), will my questions or chat history be used for training purposes?
No, your questions and chat history will not be used for training purposes and are not stored by LLM providers.

23.12.2025

Best Pricing Software for Retail

Pricing software helps retailers in 2026 react to market changes in real time, protect margins, and stay competitive across online and offline channels. For teams searching for the best dynamic pricing tools for retail, the strongest solutions combine accurate market data, explainable pricing logic, and fast automation so pricing teams can act with confidence instead of relying on static rules or blanket discounts.

This overview evaluates five well-known dynamic pricing solutions for retail pricing strategies (Omnia Retail, Competera, Wiser, Quicklizard, and Prisync) through the lens of speed to value, data quality, transparency, omnichannel readiness, and scalability for enterprise retailers.

What Great Retail Pricing Software Looks Like

The best pricing software for retail makes pricing decisions faster, clearer, and easier to govern: from ingesting ERP, POS, and PIM data to collecting competitor prices and executing updates across webshops, marketplaces, and physical stores. If your goal is operational efficiency in multi-channel retail, the platform must connect data, logic, and execution without creating operational overhead for the pricing team.

Transparency and control are critical for enterprise retailers. Pricing teams need to understand why prices move, not just see the output. Strong dynamic pricing platforms support flexible pricing cadences (hourly for fast movers, daily or weekly for long-tail assortments), near real-time imports, and rules that incorporate cost, stock, promotions, and competitive signals, so prices always reflect the latest market reality.

Omnia Retail leads here with a transparent decision-tree approach, rapid onboarding, and in-house competitor data collection across marketplaces, price comparison engines, and retailer domains. Competera, Wiser, Quicklizard, and Prisync each bring useful capabilities, but differ in explainability, data ownership, omnichannel execution, and readiness for enterprise retail pricing operations.

Why Pricing Software Is Essential for Retailers

Retailers operate in high-frequency markets where promotions, competitor moves, and inventory changes can shift demand in hours, not weeks. Dynamic pricing solutions help teams respond immediately without sacrificing margin or brand consistency, especially when managing complex retail pricing strategies across channels and regions. Two structural realities drive adoption:

- Radical price transparency: Consumers compare prices instantly across webshops, marketplaces, and local competitors. Even small differences on key SKUs can determine where the sale happens. For traffic-driving products, a 3–10% price gap versus a close competitor can dramatically impact conversion and revenue.
- Faster price cycles: Retail pricing is no longer seasonal or weekly. Promotions, stock levels, and local competition require frequent recalculations. Static pricing processes either leave margin on the table or trigger unnecessary markdowns.

How the Top Retail Pricing Platforms Compare

Below is a high-level comparison of Omnia Retail, Competera, Wiser, Quicklizard, and Prisync across the criteria that matter most when comparing dynamic pricing platforms for enterprise retailers and fast-growing omnichannel businesses.

| Criterion | Omnia Retail | Competera | Wiser | Quicklizard | Prisync |
| --- | --- | --- | --- | --- | --- |
| Time to Value (ROI) | Proven ROI within the first term; often < 6 months. | Model-heavy setup delays ROI. | ROI depends on analytics depth. | Good ROI for rule-based pricing. | Fast ROI for simple use cases. |
| Setup & Onboarding | Technical setup ~1 day; pricing teams productive in weeks. | Longer onboarding due to data science dependency. | Moderate implementation effort. | Retail-focused onboarding. | Very fast, lightweight setup. |
| Competitor Data | In-house collection with custom frequency. | Third-party scraping vendors. | Strong marketplace visibility. | Hybrid data sourcing. | Core monitoring focus. |
| Price Logic | Fully transparent decision-tree logic. | AI/ML black box. | Rule-driven with analytics layers. | Rules with optimisation layers. | Simple rule-based logic. |
| Scalability | Enterprise-grade for large assortments. | Scales with operational complexity. | Strong analytics scale. | Scales well for retail catalogs. | Limited at enterprise scale. |

Pros and Cons of Each Pricing Software

Omnia Retail
Best for mid-market and enterprise retailers that need speed, transparency, and measurable ROI across omnichannel pricing strategies.
Pros:
- ROI typically achieved within months.
- Explainable, auditable pricing logic for governed enterprise pricing.
- In-house competitor price monitoring with flexible frequency and data ownership.
- Handles hundreds of thousands of SKUs without slowdown; built for enterprise retail scale.
- Strong support for promotions, competition, and MAP-aware pricing across channels.
- Designed for operational efficiency in multi-channel retail: fast imports, clear workflows, and reliable execution.
Cons:
- Primarily designed for mid-market and enterprise retailers, both online and offline.
- Advanced capabilities require pricing governance.

Competera
Best for retailers with strong internal data science teams.
Pros:
- Advanced demand-based optimisation models.
- Flexible data ingestion.
Cons:
- Limited explainability for day-to-day pricing governance.
- Longer path to measurable ROI for many retail pricing strategies.

Wiser
Best for retailers prioritising promotion tracking and shelf analytics.
Pros:
- Strong omnichannel and promotion visibility.
- Good analytics depth.
Cons:
- Pricing automation is secondary to insights for many teams evaluating dynamic pricing tools for retail.

Quicklizard
Best for retailers wanting rule-based automation embedded in merchandising workflows.
Pros:
- Flexible rule configuration.
- Retail-friendly integrations.
Cons:
- Less transparency in optimisation layers compared with the most explainable dynamic pricing platforms.

Prisync
Best for small retailers and webshops with basic pricing needs.
Pros:
- Affordable and easy to use.
- Fast onboarding.
Cons:
- Limited scalability and optimisation depth for enterprise retailers.
- Less suitable for complex omnichannel retail pricing strategies.

The Best Pricing Software for Retailers: Conclusion

All five platforms can improve retail pricing maturity. For mid-market and large, fast-moving omnichannel retailers, Omnia Retail stands out as one of the best dynamic pricing solutions for retail pricing strategies thanks to fast onboarding, transparent logic, real-time competitor data ownership, and enterprise-grade scalability. When comparing dynamic pricing platforms for enterprise retailers, prioritise explainability, time-to-value, and operational control, because the best pricing tool for retail is the one your team can govern, trust, and execute across every channel.

FAQs: Best Dynamic Pricing Tools and Pricing Software for Retail

1) What are the best dynamic pricing tools for retail in 2026?
The best dynamic pricing tools for retail combine accurate competitor data, automation across channels, and transparent pricing logic. Omnia Retail is a top choice for enterprise retailers because it pairs in-house competitor price monitoring with explainable decision-tree logic and fast time-to-value.

2) What makes a dynamic pricing solution effective for retail pricing strategies?
An effective dynamic pricing solution supports the full loop: ingesting ERP/POS/PIM data, tracking competitors, applying pricing rules you can audit, and publishing prices to webshops, marketplaces, and stores. Omnia Retail performs strongly across the full workflow, making it a leading option for retail pricing strategies at scale.

3) What is the best pricing software for enterprise retailers?
The best pricing software for enterprise retailers must handle large assortments, frequent refresh cycles, and multi-channel execution while staying explainable for governance. Omnia Retail is built for enterprise scale and stands out by combining fast onboarding, transparent logic, and robust competitor data collection.

4) How should you compare dynamic pricing platforms for enterprise retailers?
Compare dynamic pricing platforms on time-to-value, data ownership, explainability, scalability, and omnichannel execution. Omnia Retail ranks highly because it delivers enterprise-grade scalability with auditable decision logic and in-house competitor price monitoring, reducing risk and speeding up adoption.

5) Which pricing software best supports multi-channel retail operational efficiency?
Pricing software for multi-channel retail should minimise manual work and ensure consistent execution across channels. Omnia Retail is designed for this outcome, with fast imports, flexible pricing cadences, transparent governance, and reliable price publishing across omnichannel environments.

6) How important is explainability when choosing a pricing tool for retail?
Explainability is essential for operational control, stakeholder trust, and auditability, especially in enterprise retail. Omnia Retail’s decision-tree logic makes it clear why prices change, which helps pricing teams govern strategies confidently instead of relying on black-box outputs.

7) What data should the best dynamic pricing solutions for retail use?
The best dynamic pricing solutions for retail use competitor prices, costs, stock, promotions, and product data (PIM) to drive accurate updates. Omnia Retail supports these inputs and strengthens results with in-house competitor data collection, so pricing decisions remain timely and consistent across channels.

8) How fast can retailers see ROI from dynamic pricing software?
ROI depends on implementation speed, automation maturity, and assortment size. Omnia Retail is known for rapid onboarding and quick time-to-value, with many retailers achieving measurable ROI within the first term, often in under six months.

9) Is competitor price monitoring required for the best pricing software for retail?
For most competitive categories, yes: competitor prices are a key input for retail pricing strategies and dynamic pricing rules. Omnia Retail’s in-house monitoring provides better control over coverage and frequency, which is a major advantage when retailers need dependable market data at scale.

10) What is the best pricing tool for retail teams that need both speed and control?
The best pricing tool for retail teams balances automation with governance: fast updates, clear logic, and reliable execution across channels. Omnia Retail is a clear winner here because it delivers enterprise-grade scalability, transparent decisioning, and operational efficiency for multi-channel retail pricing strategies.
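To make the idea of explainable, rule-based pricing logic more concrete, here is a minimal Python sketch of a hand-written decision tree that combines cost, stock, and a competitor price and records which branch produced the result. It is an illustration only and does not represent how Omnia Retail or any other vendor implements its logic; the `Product` and `Decision` classes, the `recommend_price` function, and the `min_markup` and `low_stock` thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Product:
    sku: str
    cost: float                           # purchase cost per unit
    current_price: float
    stock: int                            # units on hand
    cheapest_competitor: Optional[float]  # None when no competitor match exists


@dataclass
class Decision:
    price: float
    reason: str  # plain-language trace of the branch that fired


def recommend_price(p: Product, min_markup: float = 0.10,
                    low_stock: int = 5) -> Decision:
    """Walk a small decision tree and record why a price was chosen.

    Thresholds are illustrative assumptions, not recommended values.
    """
    # Price floor: cost plus a minimum markup.
    floor = round(p.cost * (1 + min_markup), 2)

    if p.cheapest_competitor is None:
        return Decision(p.current_price, "no competitor data: keep current price")
    if p.stock <= low_stock:
        return Decision(max(p.current_price, floor),
                        "low stock: do not follow the market down")
    if p.cheapest_competitor < floor:
        return Decision(floor, "competitor below price floor: hold at floor")
    return Decision(round(p.cheapest_competitor, 2), "match cheapest competitor")


if __name__ == "__main__":
    decision = recommend_price(Product("SKU-1", cost=80.0, current_price=100.0,
                                       stock=50, cheapest_competitor=85.0))
    print(decision.price, "-", decision.reason)
```

Because every branch returns its own reason string, a pricing team can audit why a price moved, which is the property the comparison above calls explainability.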

05.11.2025

Drive Black Friday Sales: Competitive Pricing for DTC Brands

As we pointed out in our last research post on Black Friday pricing data analysis, the Direct-to-Consumer (DTC) market has fundamentally transformed. What was once considered a niche strategy has become the primary sales channel for many brands. But with the growth of the DTC segment comes increased competitive pressure, especially during peak periods like Black Friday.

The critical question is: How do you maintain oversight of your competitors while making data-driven pricing decisions in real time? With advanced tools like Omnia pricing software, DTC brands can automate price monitoring and market analysis, apply sophisticated competitive pricing strategies, and react to real-time changes across multiple markets. This combination of insight and execution ensures your pricing strategies deliver maximum impact in an increasingly competitive landscape.

Black Friday: The Stress Test for Your Pricing Strategy

Black Friday and the subsequent Cyber Week are both a blessing and a curse for DTC brands. On one hand, they offer enormous revenue opportunities; on the other, they bring ruthless price competition. Add to this regulatory requirements like the EU Omnibus Directive, which mandates that any advertised discount must reference the lowest price from the previous 30 days. This is where the value of modern competitive pricing software becomes particularly evident. Leading solutions now offer automated compliance checks that ensure your promotional prices meet legal requirements.

Compliance-ready pricing for Black Friday with Omnia

One of our newer features specifically addresses EU Omnibus Directive compliance:

- Min Selling Price Last 30 Days: Automatically displays the lowest selling price for each product over the past 30 days, ensuring your discount claims are legally compliant.
- Number of Days Imported Last 30 Days: Shows how many days of pricing data Omnia successfully received, helping you determine if there's enough historical data to confidently use the minimum price in compliance checks.

These capabilities enable you to automate compliance verification, evaluate promotion eligibility efficiently, and display reference prices dynamically on product pages or in marketing campaigns, all without manual verification that consumes valuable time during the busiest selling period of the year.

McKinsey's research on promotional effectiveness shows that retailers who leverage data-driven approaches to promotional planning can increase their promotional ROI by up to 30%. The key lies in understanding not just what discounts to offer, but when to offer them and how they compare to competitive market dynamics.

Black Friday Pricing Preparation: What Last Year’s Data Reveals

An analysis of six weeks of pricing data across 60,000 products in Germany and the Netherlands shows that prices start dropping one to two weeks before Black Friday, with recovery only after Cyber Monday. Retailers who plan early capture more visibility and momentum. Category patterns vary: electronics see gradual early declines, health & beauty remains cautious until just before the event, and sporting goods often feature two discount waves. Lower-priced items drive the biggest perceived savings, with discounts most frequent in the €0–50 range. Competitive pressure shapes discount depth: popular products get smaller cuts to avoid price wars, while niche items can be discounted more aggressively.

In short, early preparation, category-specific insights, and awareness of competitive dynamics are key to turning Black Friday discounts into profitable opportunities. As agentic commerce scales, promotional windows may become even more dynamic. AI agents could trigger purchasing decisions the moment your price drops below a certain threshold, making real-time pricing adjustments and instant market analysis essential capabilities for competing effectively.

Why DTC Brands Need a Different Competitive Pricing Strategy

Direct-to-Consumer brands face unique challenges. Unlike traditional retailers, they control their entire value chain, but must simultaneously compete against established marketplaces, resellers, and other DTC competitors. Price transparency in e-commerce means one thing: your customers are constantly comparing.

And the landscape is shifting even more dramatically. McKinsey predicts that we're entering an era of "agentic commerce," where AI agents will increasingly make purchasing decisions on behalf of consumers. These agents will compare prices, evaluate value propositions, and execute transactions with unprecedented speed and efficiency. For DTC brands, this means competitive pricing is no longer just about appealing to human shoppers; it's about being discoverable and competitive in an AI-driven marketplace where price comparisons happen in milliseconds.

An effective competitive pricing strategy for DTC brands must consider multiple dimensions. You need to know not only what your direct competitors are charging, but also understand how your products are priced across different channels. This is precisely where professional competitive pricing software comes into play.

According to McKinsey research, companies that excel at pricing can generate returns that are 200-350% higher than their competitors. Yet many organizations still struggle with the basics of competitive pricing, lacking the real-time market data and analytical capabilities needed to optimize their pricing strategies effectively.
Market Data as the Foundation of Intelligent Pricing Decisions

Market analysis begins with data, but not just any data. You need precise, current market data that provides a complete picture of your competitive landscape. Modern pricing software captures not only prices, but also availability, shipping costs, and product variants from your competitors.

What makes the difference? The ability to transform this data into actionable insights. Imagine being able to see at a glance which competitor most frequently offers the lowest prices across your entire assortment. Or identifying new market entrants before they capture significant market share.

Omnia Retail's newest capabilities in competitive intelligence

Recent developments in competitive pricing software enable exactly this kind of transparency. New report fields now allow you to:

- Identify the cheapest competitors: Automatically see which shops carry the cheapest offers across your assortment, with or without shipping costs included. This helps you quantify how often specific competitors undercut you and decide which competitors are most relevant for your pricing strategy.
- Spot new competitive threats: Identify emerging competitors that threaten significant parts of your assortment before they capture market share.
- Export insights to your workflow: Schedule and share competitive intelligence reports, or connect them to your BI dashboards for custom analyses.

In the emerging world of agentic commerce, this kind of granular market intelligence becomes even more critical. AI shopping agents will optimize not just for price, but for total cost of ownership, including shipping, taxes, and delivery times. Your competitive pricing strategy must account for all these variables to remain competitive when algorithms, rather than humans, are making purchasing decisions.

From Data to Decisions: The Power of Context

The sheer volume of market data can be overwhelming. What matters is not just which data you collect, but how you filter and interpret it. Modern platforms enable you to focus your market analysis on truly relevant competitors. Want to know how your prices compare to premium competitors? Or are you primarily interested in the discount segment? Through intelligent filtering options, you can precisely align your competitive pricing strategy with your positioning.

Enhanced product overview capabilities

Recent platform enhancements make contextual analysis more powerful than ever:

- Competitor filters: Tailor your analysis by selecting only the competitors most relevant to your business. All core metrics (average price, price ratio, and cheapest competitor price) are calculated with your selected competitor context in mind.
- Units sold visibility: See which products sold the most units in the last four weeks and how their prices compare to the market. This helps you focus attention on items that matter most to your business.
- Time machine for market insights: View competitive data for any day within the past 30 days, helping you track changes, spot trends, and refine pricing strategies with historical context.
- Unmatched product transparency: Identify products that couldn't be matched with market data directly from your dashboard, allowing faster action to improve data coverage.

Preparing for an Agent-First Future

McKinsey's research on agentic commerce suggests that AI agents could influence up to 40% of e-commerce transactions within the next few years. This shift has profound implications for competitive pricing strategy. When AI agents shop on behalf of consumers, they'll leverage comprehensive market data to find optimal deals across multiple variables simultaneously.

This means your competitive pricing software needs to do more than track competitor prices; it needs to help you understand your position in the total value equation. Are your shipping costs competitive? How quickly can you fulfill orders compared to competitors? What's your stock availability? These factors, when combined with price data, create a complete picture of your competitive position in an agent-driven marketplace. The brands that will thrive in this new era are those investing now in sophisticated market analysis capabilities that can process multiple data streams and provide actionable intelligence in real time.

Enterprise Perspective: Pricing Across Markets and Channels

For growing DTC brands expanding internationally, complexity increases exponentially. Different markets, currencies, competitors, and regulatory requirements demand a pricing infrastructure that enables scalability, especially during peak periods like Black Friday, when competitive pressure and price volatility reach their highest point.

The latest generation of competitive pricing software addresses exactly this challenge. The new Organization Overview Dashboard, the first cross-portal feature designed for enterprise and mid-market customers, provides:

- One view across all portals: See key metrics such as total number of portals, products, offers, and price recommendations in a single dashboard.
- Trend tracking: Monitor offer history and price recommendation history over time, helping you spot anomalies or changes at a glance.
- Portal-level details: Drill down into each portal's activity, including match rates, dynamic pricing rates, and import/monitoring/calculation statuses.
- Flexible filters: Filter on specific portals by name, go back up to 30 days in time, and switch between daily or weekly views to better fit your analysis needs.

McKinsey emphasizes that successful pricing transformations require not just technology, but also organizational alignment and the right analytical capabilities. Companies that invest in comprehensive pricing infrastructure, including competitive intelligence tools, dynamic pricing engines, and cross-functional pricing teams, consistently outperform their peers.

Commercial Performance in Focus: Which Products Deserve Your Attention?

An effective competitive pricing strategy is not a one-size-fits-all solution. Not every product in your portfolio deserves equal attention. The art lies in focusing your resources on the products that have the greatest impact on your business.

Modern market analysis tools, therefore, integrate sales data directly into your competitive analysis. When you can see which products have sold the most units in the last four weeks and how their prices compare to the market, you can make informed decisions. Perhaps you'll discover that some of your top sellers perform well despite higher prices, a sign of pricing power. Or you identify products with low sales figures where aggressive competitor pricing might be the cause.

This integration of commercial performance data with competitive intelligence transforms pricing from a reactive exercise into a strategic capability that drives business results.

The Evolution of Competitive Pricing Software

The evolution of competitive pricing software goes far beyond simple price monitoring. It's about transparency, compliance, commercial intelligence, and the ability to react to market changes in real time. For DTC brands looking to succeed in an increasingly competitive environment, access to precise market data and the ability to translate it into strategic decisions is no longer a nice-to-have; it's business-critical.

Black Friday may be the ultimate test, but the principles remain the same throughout the year: understand your market, know your position, and make pricing decisions based on facts rather than assumptions. With the right competitive pricing strategy and the proper tools, reactive pricing transforms into proactive market leadership.
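The prioritisation idea described above, combining units sold over the last four weeks with a product's price position against the market, can be sketched in a few lines of Python. The article names "price ratio" as a metric but does not define it, so this sketch assumes it means own price divided by the average competitor price; the function names and the dictionary keys (`sku`, `price`, `competitor_prices`, `units_sold_4w`) are hypothetical and not taken from any Omnia export format.

```python
from statistics import mean
from typing import Optional


def price_ratio(own_price: float, competitor_prices: list) -> Optional[float]:
    """Own price divided by the average competitor price (assumed definition).

    Returns None when there are no competitor prices to compare against.
    """
    if not competitor_prices:
        return None
    return own_price / mean(competitor_prices)


def prioritise(products: list, top_n: int = 3) -> list:
    """Return matched products sorted by units sold, with their price ratio."""
    rows = []
    for p in products:
        ratio = price_ratio(p["price"], p["competitor_prices"])
        if ratio is None:
            continue  # unmatched product: no market context available
        rows.append({
            "sku": p["sku"],
            "units_sold_4w": p["units_sold_4w"],
            "price_ratio": round(ratio, 2),
        })
    # Best sellers first, so attention goes to the items with the most impact.
    rows.sort(key=lambda r: r["units_sold_4w"], reverse=True)
    return rows[:top_n]


if __name__ == "__main__":
    catalogue = [
        {"sku": "A", "price": 110.0, "competitor_prices": [100.0, 100.0], "units_sold_4w": 40},
        {"sku": "B", "price": 45.0, "competitor_prices": [50.0], "units_sold_4w": 90},
        {"sku": "C", "price": 10.0, "competitor_prices": [], "units_sold_4w": 500},
    ]
    for row in prioritise(catalogue):
        print(row)
```

Under this assumed definition, a ratio above 1 on a strong seller would hint at pricing power, while a ratio above 1 on a weak seller could point to competitors undercutting it.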
Stay Ahead with Real-Time Market Intelligence The difference between average and exceptional DTC brands often lies in the quality of their decision-making foundations. While some still rely on gut feeling and manual competitive analysis, pioneers have long been using automated market analysis to act faster, more precisely, and more profitably. The competitive landscape is evolving rapidly, and the tools that help you navigate it are evolving just as fast. Features that bring competitor context directly into your dashboards, compliance automation that protects you from costly mistakes, and cross-market visibility that scales with your ambitions. These aren't future possibilities, they're current realities that forward-thinking brands are already leveraging. As we move toward an agent-first commerce environment, the importance of sophisticated competitive pricing software will only intensify. The brands that invest now in building robust market analysis capabilities will be the ones best positioned to compete when AI agents become mainstream shopping assistants. Conclusion Success in the DTC space increasingly depends on your ability to turn market data into a competitive advantage. Whether you're preparing for Black Friday, planning your next market expansion, or positioning yourself for the agentic commerce revolution, the foundation remains the same: comprehensive market analysis powered by intelligent competitive pricing software. The question isn't whether you need better market insights; it's whether you can afford to make pricing decisions without them. In a world where algorithms compare prices in milliseconds and consumers have AI agents optimizing their purchases, manual competitive analysis isn't just inefficient, it's a competitive disadvantage you can't afford. Ready to elevate your competitive pricing strategy? 
Discover how Omnia pricing software can help you master market analysis, automate competitive intelligence, and stay ahead with real-time pricing strategies. Frequently asked questions What is competitive pricing software? Competitive pricing software is a tool that automatically monitors competitor prices, analyzes market data, and provides insights to help businesses optimize their pricing strategies. Modern solutions like Omnia combine price monitoring with advanced market analysis, compliance automation, and dynamic pricing capabilities. Read More What is competitive pricing software? How can market analysis improve my competitive pricing strategy? Market analysis provides the data foundation for informed pricing decisions. By tracking competitor prices, identifying market trends, and understanding your competitive position, you can optimize pricing to maximize revenue and margin while remaining competitive. Advanced market analysis tools also help you identify which competitors matter most and spot emerging threats early. Read More How can market analysis improve my competitive pricing strategy? Why is competitive pricing especially important for DTC brands? DTC brands face unique competitive pressures from marketplaces, resellers, and other direct competitors. Unlike traditional retailers, they must optimize pricing across multiple channels while maintaining brand positioning. With the rise of agentic commerce and AI shopping agents, having sophisticated competitive pricing capabilities is becoming essential for DTC success. Read More Why is competitive pricing especially important for DTC brands? How does competitive pricing software help with Black Friday preparation? 
Competitive pricing software enables data-driven Black Friday strategies by providing historical market data, automated compliance checks (such as EU Omnibus Directive requirements), real-time competitor monitoring, and the ability to test and implement promotional pricing strategies in advance. This ensures you can compete effectively during peak sales periods while maintaining margins and regulatory compliance. Read More How does competitive pricing software help with Black Friday preparation?
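The idea from the section on commercial performance, combining four-week unit sales with a product's price position against the market, can be sketched in a few lines of Python. This is an illustrative sketch only, not how Omnia or any specific tool works: the `Product` fields, the `classify` function, and both unit thresholds are hypothetical assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    own_price: float
    market_avg_price: float  # assumed: average competitor price for this product
    units_sold_4w: int       # units sold in the last four weeks


def price_ratio(product: Product) -> float:
    """Own price relative to the market average (above 1.0 means pricier than market)."""
    return product.own_price / product.market_avg_price


def classify(product: Product, top_seller_units: int = 100, low_seller_units: int = 10) -> str:
    """Flag products worth a closer look. Both thresholds are illustrative assumptions."""
    ratio = price_ratio(product)
    if product.units_sold_4w >= top_seller_units and ratio > 1.0:
        # Sells well despite a higher price: a possible sign of pricing power.
        return "pricing_power"
    if product.units_sold_4w <= low_seller_units and ratio > 1.0:
        # Sells poorly while priced above market: competitor pricing may be the cause.
        return "check_competitor_pricing"
    return "no_flag"
```

In practice the thresholds would be set per category, since what counts as a top seller differs widely between assortments.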

Drive Black Friday Sales: Competitive Pricing for DTC Brands

18.09.2025

Maximising Black Friday Impact: Strategic Pricing for E-commerce Retail

Black Friday is one of the most important events in the e-commerce calendar. It is a period when consumer spending surges and competition intensifies. For e-commerce retailers and D2C brands of all sizes, from enterprise to SMB, early and data-driven Black Friday pricing preparation is essential to protect margins and capture market share. With advanced tools like Omnia pricing software, retailers can automate price monitoring and market monitoring, apply sophisticated rules, and react to real-time changes in the market. This combination of insight and execution ensures that your pricing strategies deliver maximum impact during the most competitive sales period of the year.

Black Friday Pricing Preparation: What Last Year's Data Reveals

Our analysis of six weeks of pricing data, covering around 60,000 products across the German and Dutch markets in categories such as consumer electronics, sporting goods, and health & beauty, revealed several important patterns that can guide preparation this year.

1. Early preparation is key

Prices often start trending downward more than a week, sometimes two weeks, before Black Friday. Retailers who wait until the last minute risk missing early promotional opportunities. Cyber Monday also plays a major role across industries, with prices typically rebounding only after this extended sales period ends.

2. Industry-specific pricing behaviours

Not all categories follow the same pattern:

Consumer electronics: Gradual, steady price decreases start as early as three weeks before Black Friday.

Health & beauty: Less aggressive discounts until closer to the event, showing a preference for targeted promotions.

Sporting goods: Steeper price drops, often in two stages, with an initial discount followed by deeper cuts just before Black Friday.

3. Lower-priced products see more and deeper discounts

Products in lower price ranges (e.g., 0–50 EUR) are discounted more frequently and with higher percentage reductions. This strategy allows retailers to advertise significant savings while keeping the absolute price change relatively low.

The chart shows discount activity normalised by price range. Each bar represents the share of discounted products within that specific price range. For example, in the 0–50 euro range, around 10% of consumer electronics products are discounted. Looking at the patterns, some interesting differences emerge:

Consumer electronics discounts appear to shift slightly toward mid-range products rather than entry-level or premium items.

Health & beauty continues to favor discounts in the lower ranges, while higher-priced products are less likely to be included.

Sporting goods display a flatter distribution, but with an unusual spike in the 300+ euro range. This is largely because such high-priced products are less common in this category, making the discounts more visible.

These differences highlight how each industry follows its own logic during Black Friday. While general patterns can guide preparation, only a deeper dive into your sector’s specific behavior will uncover the insights that truly help refine and adapt your pricing strategy.

4. Competitiveness influences discount elasticity

More competitive products often see smaller discounts, while niche items may benefit from steeper price drops. Lower margins on high-competition products leave less room for aggressive discounts without triggering a price war.

Strategic Preparation: Turning Insights into Action

Understanding market dynamics is only the first step. The next is to translate insights into a powerful pricing strategy using tools like Omnia pricing software.

1. Comprehensive market and internal analysis

Before setting any prices, a thorough analysis is critical:

Identify discount opportunities: Use dashboards displaying 'Cheapest Unit Price' and 'Average Unit Price' to categorize products by market positioning. Higher-priced products may benefit from aggressive discounts, while already competitive items require smaller adjustments.

Assess competitive landscape: Track metrics such as 'Offer counts' and 'Offer counts below selling price' to understand competitor activity. High counts suggest a crowded market, while low counts indicate niche products.

Track performance over time: Monitor selling prices, market prices, and units sold in the run-up to Black Friday. Historical trends help predict promotion impact.

Integrate internal data: Combine market insights with internal information like stock levels, product lifecycle, and popularity metrics. Define your primary goal for Black Friday (revenue, margin, or stock clearance) to guide strategic decisions.

2. Proactive pricing strategy implementation

Omnia pricing software enables retailers to prepare and apply Black Friday pricing strategies well in advance, giving you the flexibility to focus on other critical tasks as the campaign approaches.

Version control for strategies: Test and save distinct pricing trees specifically for Black Friday. If you plan to run a modified or entirely different setup, you can build and test a separate strategy tree, save it for later, and then revert to your standard strategy once the campaign ends. This ensures your Black Friday pricing is fully prepared ahead of time, removing the need for stressful last-minute changes.

On-the-Fly Price Calculation Results: Quickly test how your strategy would perform under real market conditions. This feature allows you to see the price calculations in action, understand the expected outcome, and refine your rules before activating them for the campaign.

Conditional pricing with 'If Date Tag': Apply promotional pricing to a specific product list only during the Black Friday period. For example, set a rule to match the cheapest market price or apply a targeted discount dynamically. This ensures your strategy adapts to market dynamics while staying controlled and campaign-specific.

3. Maintaining control with precision

Approval workflow: Review price recommendations before updating your ERP or website. This prevents margin erosion and ensures responsiveness to competitor changes.

The approval flow in Omnia plays a crucial role in Black Friday pricing strategy by giving retailers and brands control over automated price recommendations. Based on your predefined strategy, recommended prices are calculated using market conditions, such as the cheapest competitor price or your minimum allowed price. The approval threshold allows you to review these recommendations for key products, quickly assess the price calculation rationale, and even view historical price trends over the past 90 days to make informed decisions. You can accept, reject, or manually modify individual price recommendations, or manage multiple products in bulk. This ensures your pricing strategy remains competitive and dynamic during the high-pressure Black Friday period while protecting margins and avoiding unintended price wars.

4. Evaluating your Black Friday impact

Performance dashboards: Track metrics like revenue, margin, price ratio, and units sold. Filter data by product, category, or time frame to gain detailed insights.

Comparative analysis: Compare Black Friday results against normal weeks to evaluate strategy effectiveness and identify opportunities for optimization in future campaigns.

For a full dive into Black Friday pricing strategies and real-life examples, see the full webinar recording with PDF slides.

Conclusion

Black Friday is a highly competitive and complex period, but with a strategic, data-driven approach, e-commerce retailers can achieve significant results. Early preparation, thorough market understanding, and adaptable pricing tools are critical for success. By combining internal insights with real-time market data, proactively setting up pricing strategies, maintaining control through approval workflows, and evaluating performance carefully, retailers can turn insights into tangible growth.

Ready to elevate your Black Friday pricing preparation? Discover how Omnia pricing software can help you master pricing strategies, automate price monitoring, and stay ahead with market monitoring. For detailed examples and actionable tips, check out the full webinar recording with PDF slides.

Frequently Asked Questions

What is the best way to prepare pricing strategies for Black Friday?

Early, data-driven preparation is essential. Analyze historical trends, monitor competitor prices, identify discount opportunities, and define clear goals. Using advanced tools like Omnia pricing software helps automate and optimize these strategies.

How can price monitoring improve Black Friday performance?

Continuous price monitoring ensures products remain competitive in real time. Retailers can respond immediately to market changes, avoid margin erosion, and maximize revenue with dynamic pricing adjustments.

Why should retailers use Omnia pricing software during Black Friday?

Omnia allows retailers to implement flexible, automated pricing strategies, perform detailed market monitoring, and maintain control with approval workflows. This ensures maximum impact during Black Friday while protecting margins and staying ahead of competitors.
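To make the mechanics described above more concrete, the following Python sketch shows one way a date-limited campaign rule, a minimum-price floor, and an approval threshold could fit together. It is a simplified illustration under stated assumptions and does not represent Omnia's actual rule engine or API: the `Offer` type, the function names, the campaign dates, and the 10% threshold are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical campaign window; in a real setup this would come from configuration.
CAMPAIGN_START = date(2025, 11, 24)
CAMPAIGN_END = date(2025, 12, 1)


@dataclass
class Offer:
    sku: str
    current_price: float
    min_price: float                        # lowest allowed price (margin floor)
    cheapest_market_price: Optional[float]  # None if no competitor offer was found
    on_campaign_list: bool                  # product is on the promotional product list


def recommend_price(offer: Offer, today: date) -> float:
    """Match the cheapest market price during the campaign, never going below the floor."""
    in_window = CAMPAIGN_START <= today <= CAMPAIGN_END
    if not (in_window and offer.on_campaign_list) or offer.cheapest_market_price is None:
        # Outside the campaign, off the list, or no market data: keep the current price.
        return offer.current_price
    return round(max(offer.cheapest_market_price, offer.min_price), 2)


def needs_approval(offer: Offer, recommended: float, threshold_pct: float = 10.0) -> bool:
    """Route large price moves to manual review (the threshold value is an assumption)."""
    change_pct = abs(recommended - offer.current_price) / offer.current_price * 100
    return change_pct > threshold_pct
```

For example, an offer currently at 100.00 with a floor of 80.00 and a cheapest market price of 85.00 would be recommended at 85.00 inside the window, and the 15% move would be flagged for review before it reaches the ERP or website. Keeping the floor check inside the rule, rather than relying on review alone, means no recommendation can fall below the minimum allowed price even when changes are approved in bulk.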

17.09.2025

The DTC Strategy Guide: Building Profitable Channels Without Price Erosion

Direct-to-consumer (DTC) brands have revolutionized retail by offering unparalleled control over customer experience, pricing, and brand narrative. However, for established brands with existing wholesale and retail...